AI Paradigm Shift: Rich Sutton, ‘Bitter Lesson’ Author, Signals Doubts on Pure LLM Scaling

In a development that has stirred debate across AI research, Rich Sutton, the influential Turing Award winner and author of the widely cited 2019 essay ‘The Bitter Lesson,’ appears to have publicly shifted his stance on the future of pure large language models (LLMs). Sutton’s original essay, often read as a rallying cry for scaling general-purpose methods in AI, had been a cornerstone for advocates of ever-larger LLMs.

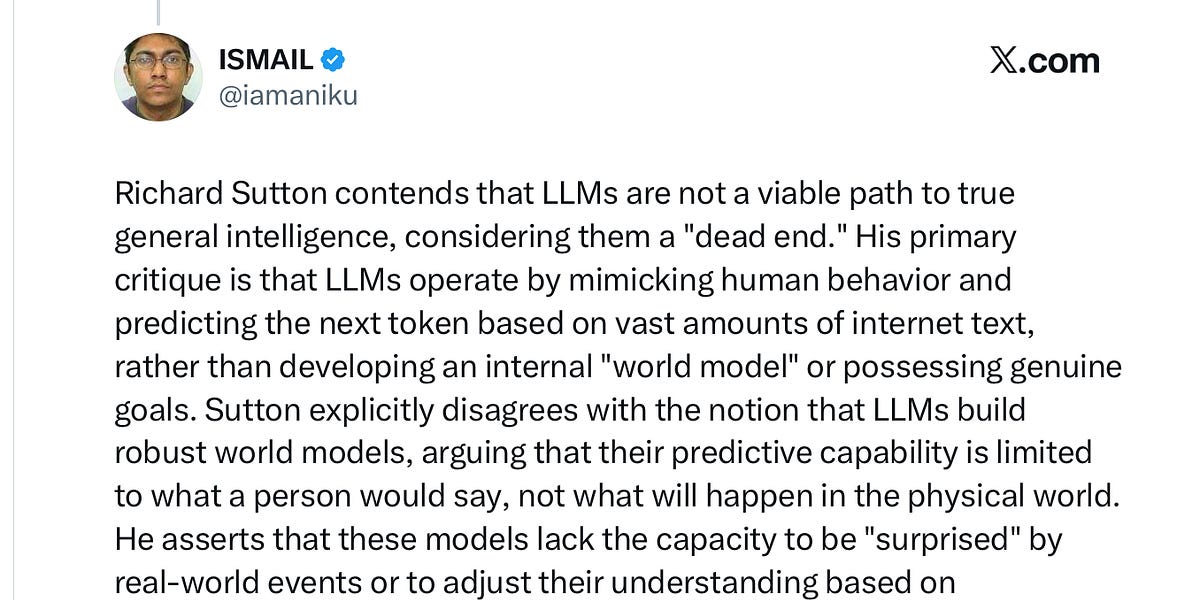

However, Sutton’s recent comments on a popular podcast indicate a departure from the unbridled optimism surrounding pure LLM scaling. This pivot, which has sent ripples through the AI community, aligns strikingly with long-standing critiques from prominent AI researchers, including Gary Marcus, who has consistently argued against treating scaling as the sole path to advanced AI.

Sutton’s reevaluation suggests a growing consensus among leading thinkers that while scaling has yielded impressive results, it may not be ‘all you need.’ This sentiment echoes earlier critiques from figures like Yann LeCun and Google DeepMind CEO Sir Demis Hassabis, who have also emphasized the limitations of pure prediction models and the necessity of incorporating ‘world models’ and other architectural innovations.

Because Sutton was once seen as a patron saint of the scaling hypothesis, his shift may mark a turning point in AI research. It signals a renewed focus on hybrid approaches that pair the power of general-purpose methods with more structured techniques, such as neurosymbolic or reinforcement learning-based methods, to overcome the inherent limitations of current LLMs.
